Cross Validation Techniques in Machine Learning using Python

In machine learning, we can’t simply rely on the training dataset for the performance of the model that is there might be chances that the training data may not have learnt properly which can lead to incorrect predictions for the independent or real-world data. In technical terms, this is called high bias of the model we need a model which has low bias and low variance.

To counter this problem, we use the technique called as “Cross Validation” which is used to measure performance of machine learning models. It takes subsets of data to train the model and henceforth learn properly all the patterns present in the data which ultimately improves performance of the model.

Table of content

Types of cross validation

Exhaustive cross validation

Leave p out cross validation

Leave one out cross validation

Non- Exhaustive cross validation

Hold Out Method

K Fold cross validation

Stratified K Fold cross validation

Conclusion

In practical terms, when we introduce cross validation, we want to see which set of input data is better for predicting the predicted values of the new data. Then we will want to include some data which is similar to the original data and some data which is totally different to the original data to check if the new data is more similar to the original data.

Example to explain Cross Validation

This problem can be simplified if we take an example of predicting the weather forecast. Let’s say, we want to predict the weather and we have a set of data that is like current weather but is actually data from different weather forecast. What we can do is to take a set of data similar to the original data, add some data from the different weather forecast and check if the new set of data is better than the original data.

With this technique, we can find the set of the input data that is most similar to the new set of data and then put a positive weight to this set and the set of data which is least similar to the original data. This way, we can increase the confidence that our predicted values for new data are similar to the original.

Types of Cross validation

There are two types of cross-validation techniques.

Exhaustive Cross Validation: Exhaustive cross-validation methods are cross-validation methods which takes all possible ways of splitting train and validation data into consideration.

Non-exhaustive cross-validation: Non-exhaustive cross validation methods do not compute all ways of splitting the original sample.

Exhaustive Cross Validation

Leave p-out cross validation

In this method, we take p number of points from the dataset having n number of points. We train the model on (n-p) number of data points while validation is done on p number of points. This process is repeated for all possible combinations of p data points from the dataset. The performance of the model is calculated by taking the average of all the accuracies obtained in each iteration.

from sklearn.model_selection import LeavePOut

import numpy as ny

X = ny.array([[5,4], [7,11], [8,4], [1,9],[9,11]])

y = ny.array([1,3,4,7,9])

# Note : A leave p out behaves similar to a leave one out when p=1

lpo = LeavePOut(1)

for train_index, test_index in lpo.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [1 2 3 4] TEST: [0]

TRAIN: [0 2 3 4] TEST: [1]

TRAIN: [0 1 3 4] TEST: [2]

TRAIN: [0 1 2 4] TEST: [3]

TRAIN: [0 1 2 3] TEST: [4]

lpo = LeavePOut(2)

for train_index, test_index in lpo.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [2 3 4] TEST: [0 1]

TRAIN: [1 3 4] TEST: [0 2]

TRAIN: [1 2 4] TEST: [0 3]

TRAIN: [1 2 3] TEST: [0 4]

TRAIN: [0 3 4] TEST: [1 2]

TRAIN: [0 2 4] TEST: [1 3]

TRAIN: [0 2 3] TEST: [1 4]

TRAIN: [0 1 4] TEST: [2 3]

TRAIN: [0 1 3] TEST: [2 4]

TRAIN: [0 1 2] TEST: [3 4]

Leave one-out cross validation

This is the particular case of leave p out cross validation when p=1. In this method, we perform training on n number of data-points that is training is performed on all the data points present in the dataset.

from sklearn.model_selection import LeaveOneOut

loo = LeaveOneOut()

for train_index, test_index in loo.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [1 2 3 4] TEST: [0]

TRAIN: [0 2 3 4] TEST: [1]

TRAIN: [0 1 3 4] TEST: [2]

TRAIN: [0 1 2 4] TEST: [3]

TRAIN: [0 1 2 3] TEST: [4]

Non- Exhaustive Cross Validation

Holdout Method

This is the simplest validation technique in which we divide our entire dataset into training data and testing data. We train the model on training data and evaluate the performance on testing data. Generally, we take 70:30 or 75:25 ratio for training and testing data. This model is computationally efficient but it results in high variance because it’s not certain which data point will end up in validation set.

K Fold Cross Validation

In this method, dataset is divided into k number of subsets and holdout method is repeated k number of times. The steps involved in the process are:

Random split of the data.

Training will be performed on (k-1) folds and testing will be done on kth fold of the data.

Repeat this process for k number of times

Accuracy is calculated by taking out average of all the accuracies obtained in each iteration.

It takes all the possible combinations of train and test data form our dataset. Hence, this results in low biased model. Consider a dataset having 1000 records that is 1000 data points are there in the dataset. Now let us take K=5 that is we have 5 number of iterations or folds for our validation purpose. For first iteration, 200 data points will be the test data and remaining 800 data points will be training data. Similarly for second iteration, new 200 data points will be considered as test data and remaining 800 data points will be considered as training data. This process will go on for K number of times (here k=5). In this way, our model is able to learn all the patterns in the data.

This method results in significantly low bias and low variance as it considers nearly all the data points for training and testing purpose.

from sklearn.model_selection import KFold

kf = KFold(n_splits=2)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [3 4] TEST: [0 1 2]

TRAIN: [0 1 2] TEST: [3 4]

kf = KFold(n_splits=3)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [2 3 4] TEST: [0 1]

TRAIN: [0 1 4] TEST: [2 3]

TRAIN: [0 1 2 3] TEST: [4]

# Note : when number of split = number of record, Kfold behaves as leave one out cv

kf = KFold(n_splits=5)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [1 2 3 4] TEST: [0]

TRAIN: [0 2 3 4] TEST: [1]

TRAIN: [0 1 3 4] TEST: [2]

TRAIN: [0 1 2 4] TEST: [3]

TRAIN: [0 1 2 3] TEST: [4]

Stratified K Fold Cross Validation

Though k fold cross validation takes all the data points into account but there is problem associated with it. Consider a binary classification model. A problem may arise during the cross validation if the model gets trained on only one of the class during the Kth iteration. This mainly occurs when we have an imbalanced dataset. To counter this problem, we use another technique called Stratified K fold cross validation which considers nearly equal percentage of the target class in each iteration.

from sklearn.model_selection import StratifiedKFold

X = ny.array([[10,20,30], [40,50,60], [70,80,90], [100,110,120],

[5,15,25], [35,45,55], [65,75,85], [95,105,115]])

y = ny.array([0, 0, 1, 1, 0, 1, 0, 0])

skf = StratifiedKFold(n_splits=2)

for train_index, test_index in skf.split(X, y):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [3 5 6 7] TEST: [0 1 2 4]

TRAIN: [0 1 2 4] TEST: [3 5 6 7]

skf = StratifiedKFold(n_splits=3)

for train_index, test_index in skf.split(X, y):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [3 4 5 6 7] TEST: [0 1 2]

TRAIN: [0 1 2 5 7] TEST: [3 4 6]

TRAIN: [0 1 2 3 4 6] TEST: [5 7]

skf = StratifiedKFold(n_splits=4)

for train_index, test_index in skf.split(X, y):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [2 3 4 5 6 7] TEST: [0 1]

TRAIN: [0 1 3 5 6 7] TEST: [2 4]

TRAIN: [0 1 2 4 5 7] TEST: [3 6]

TRAIN: [0 1 2 3 4 6] TEST: [5 7]

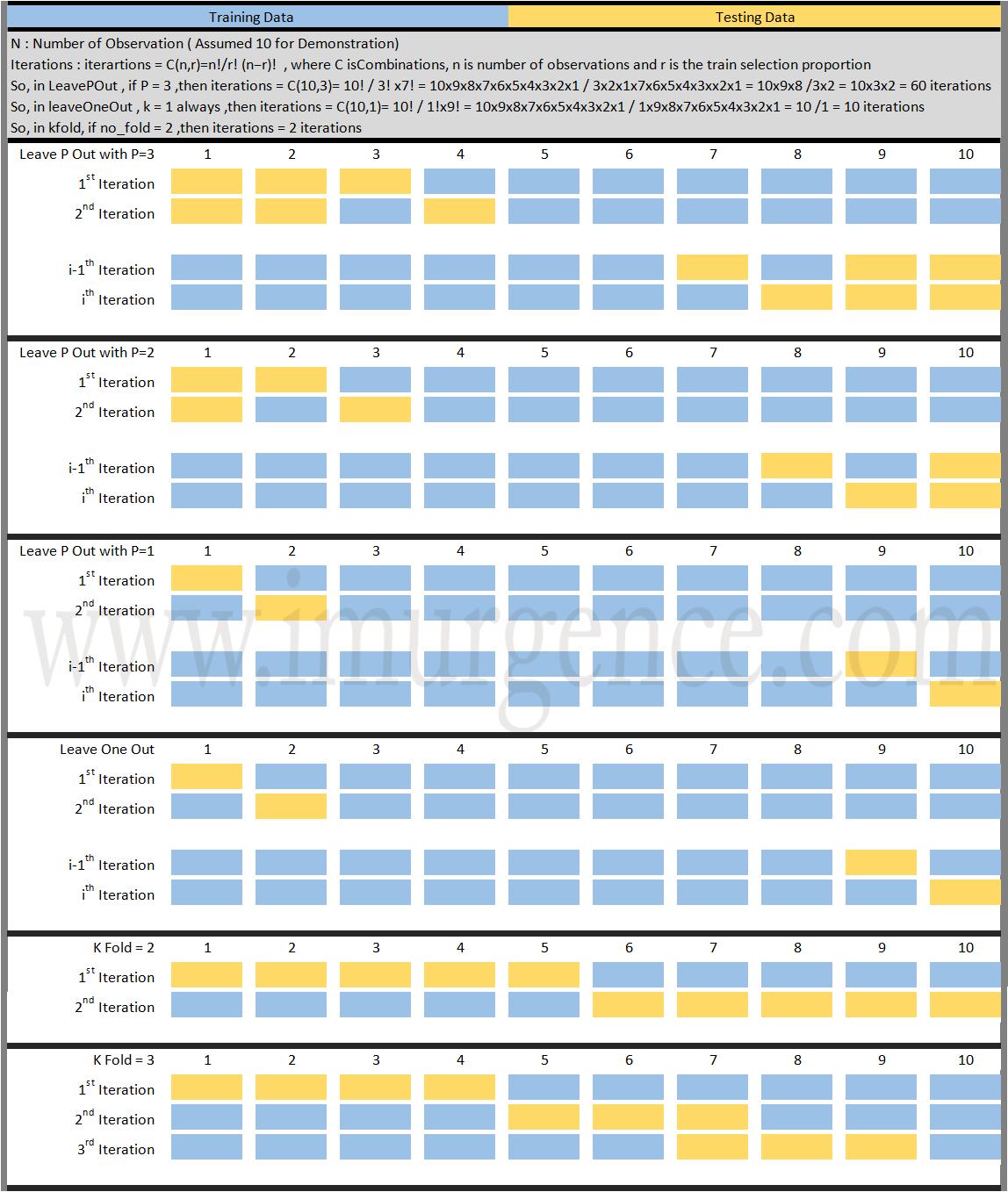

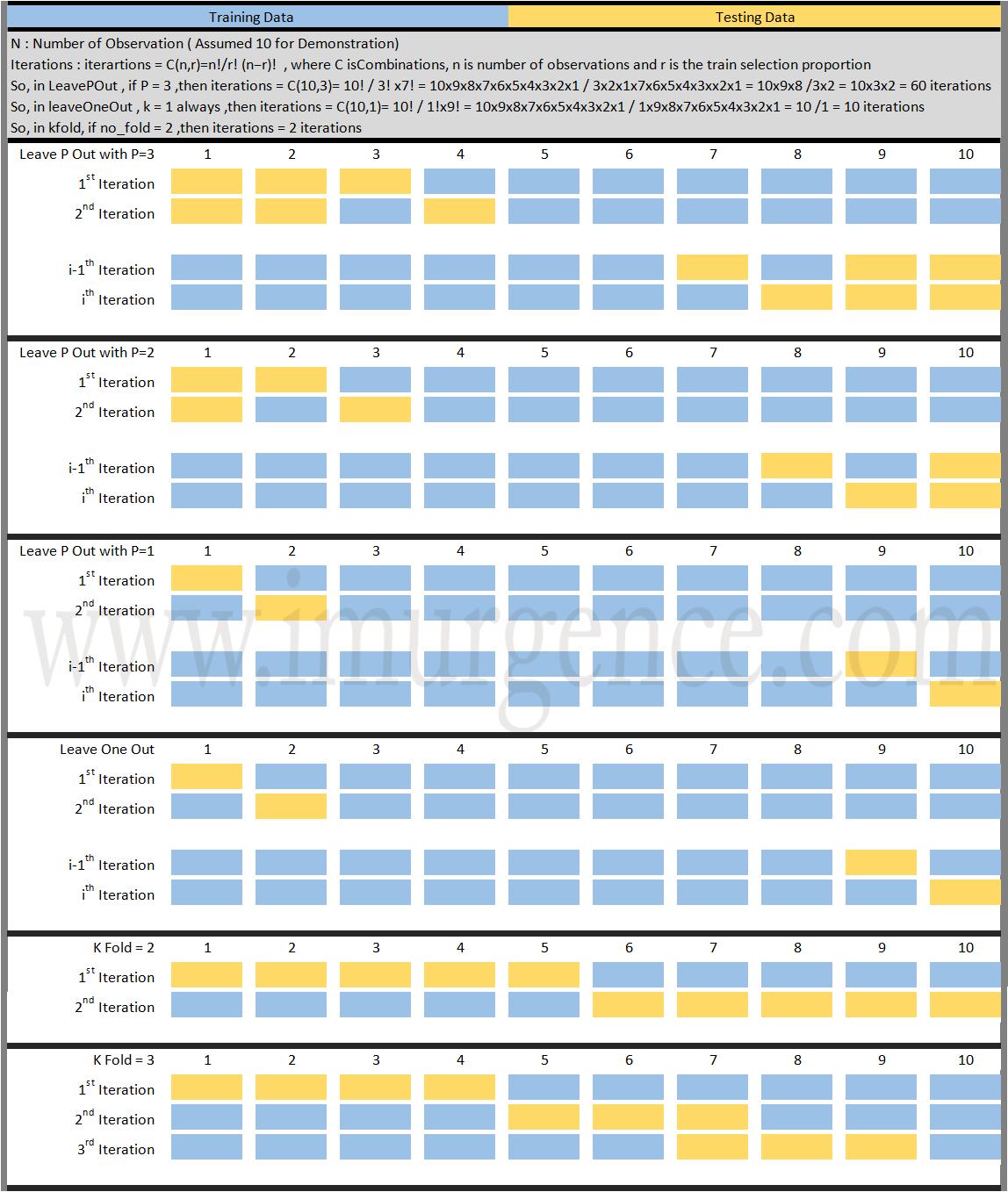

Figure : Cross Validation Illustration

Conclusion

In this blog, we went through various cross validation techniques. These techniques play an important role in determining overall performance of the machine learning model. Mainly, Non-exhaustive cross validation techniques are used in machine learning. It helps in avoiding overfitting problem and selection of ML algorithm for a particular problem. If you want to refer to a implementation of cross validation techniques in a case study then I would recommend to read How to Use Hyperparameter Tuning in Python Machine Learning.

About the Author's:

Sauhard Tripathi

Sauhard is currently pursuing Master’s of operational research from Delhi University and has done his graduation in Mathematics. With a keen interest in Data science and background in Statistic's, he want's to make it big into Data Science and Machine Learning field.

Mohan Rai

Mohan Rai is an Alumni of IIM Bangalore , he has completed his MBA from University of Pune and Bachelor of Science (Statistics) from University of Pune. He is a Certified Data Scientist by EMC. Mohan is a learner and has been enriching his experience throughout his career by exposing himself to several opportunities in the capacity of an Advisor, Consultant and a Business Owner. He has more than 18 years’ experience in the field of Analytics and has worked as an Analytics SME on domains ranging from IT, Banking, Construction, Real Estate, Automobile, Component Manufacturing and Retail. His functional scope covers areas including Training, Research, Sales, Market Research, Sales Planning, and Market Strategy.

In machine learning, we can’t simply rely on the training dataset for the performance of the model that is there might be chances that the training data may not have learnt properly which can lead to incorrect predictions for the independent or real-world data. In technical terms, this is called high bias of the model we need a model which has low bias and low variance.

To counter this problem, we use the technique called as “Cross Validation” which is used to measure performance of machine learning models. It takes subsets of data to train the model and henceforth learn properly all the patterns present in the data which ultimately improves performance of the model.

Table of content

- Types of cross validation

- Exhaustive cross validation

- Leave p out cross validation

- Leave one out cross validation

- Non- Exhaustive cross validation

- Hold Out Method

- K Fold cross validation

- Stratified K Fold cross validation

- Conclusion

In practical terms, when we introduce cross validation, we want to see which set of input data is better for predicting the predicted values of the new data. Then we will want to include some data which is similar to the original data and some data which is totally different to the original data to check if the new data is more similar to the original data.

Example to explain Cross Validation

This problem can be simplified if we take an example of predicting the weather forecast. Let’s say, we want to predict the weather and we have a set of data that is like current weather but is actually data from different weather forecast. What we can do is to take a set of data similar to the original data, add some data from the different weather forecast and check if the new set of data is better than the original data.

With this technique, we can find the set of the input data that is most similar to the new set of data and then put a positive weight to this set and the set of data which is least similar to the original data. This way, we can increase the confidence that our predicted values for new data are similar to the original.

There are two types of cross-validation techniques.

- Exhaustive Cross Validation: Exhaustive cross-validation methods are cross-validation methods which takes all possible ways of splitting train and validation data into consideration.

- Non-exhaustive cross-validation: Non-exhaustive cross validation methods do not compute all ways of splitting the original sample.

In this method, we take p number of points from the dataset having n number of points. We train the model on (n-p) number of data points while validation is done on p number of points. This process is repeated for all possible combinations of p data points from the dataset. The performance of the model is calculated by taking the average of all the accuracies obtained in each iteration.

from sklearn.model_selection import LeavePOut

import numpy as ny

X = ny.array([[5,4], [7,11], [8,4], [1,9],[9,11]])

y = ny.array([1,3,4,7,9])

# Note : A leave p out behaves similar to a leave one out when p=1

lpo = LeavePOut(1)

for train_index, test_index in lpo.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [1 2 3 4] TEST: [0]

TRAIN: [0 2 3 4] TEST: [1]

TRAIN: [0 1 3 4] TEST: [2]

TRAIN: [0 1 2 4] TEST: [3]

TRAIN: [0 1 2 3] TEST: [4]

lpo = LeavePOut(2)

for train_index, test_index in lpo.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [2 3 4] TEST: [0 1]

TRAIN: [1 3 4] TEST: [0 2]

TRAIN: [1 2 4] TEST: [0 3]

TRAIN: [1 2 3] TEST: [0 4]

TRAIN: [0 3 4] TEST: [1 2]

TRAIN: [0 2 4] TEST: [1 3]

TRAIN: [0 2 3] TEST: [1 4]

TRAIN: [0 1 4] TEST: [2 3]

TRAIN: [0 1 3] TEST: [2 4]

TRAIN: [0 1 2] TEST: [3 4]

This is the particular case of leave p out cross validation when p=1. In this method, we perform training on n number of data-points that is training is performed on all the data points present in the dataset.

from sklearn.model_selection import LeaveOneOut

loo = LeaveOneOut()

for train_index, test_index in loo.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [1 2 3 4] TEST: [0]

TRAIN: [0 2 3 4] TEST: [1]

TRAIN: [0 1 3 4] TEST: [2]

TRAIN: [0 1 2 4] TEST: [3]

TRAIN: [0 1 2 3] TEST: [4]

This is the simplest validation technique in which we divide our entire dataset into training data and testing data. We train the model on training data and evaluate the performance on testing data. Generally, we take 70:30 or 75:25 ratio for training and testing data. This model is computationally efficient but it results in high variance because it’s not certain which data point will end up in validation set.

In this method, dataset is divided into k number of subsets and holdout method is repeated k number of times. The steps involved in the process are:

- Random split of the data.

- Training will be performed on (k-1) folds and testing will be done on kth fold of the data.

- Repeat this process for k number of times

- Accuracy is calculated by taking out average of all the accuracies obtained in each iteration.

It takes all the possible combinations of train and test data form our dataset. Hence, this results in low biased model. Consider a dataset having 1000 records that is 1000 data points are there in the dataset. Now let us take K=5 that is we have 5 number of iterations or folds for our validation purpose. For first iteration, 200 data points will be the test data and remaining 800 data points will be training data. Similarly for second iteration, new 200 data points will be considered as test data and remaining 800 data points will be considered as training data. This process will go on for K number of times (here k=5). In this way, our model is able to learn all the patterns in the data.

This method results in significantly low bias and low variance as it considers nearly all the data points for training and testing purpose.

from sklearn.model_selection import KFold

kf = KFold(n_splits=2)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [3 4] TEST: [0 1 2]

TRAIN: [0 1 2] TEST: [3 4]

kf = KFold(n_splits=3)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [2 3 4] TEST: [0 1]

TRAIN: [0 1 4] TEST: [2 3]

TRAIN: [0 1 2 3] TEST: [4]

# Note : when number of split = number of record, Kfold behaves as leave one out cv

kf = KFold(n_splits=5)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [1 2 3 4] TEST: [0]

TRAIN: [0 2 3 4] TEST: [1]

TRAIN: [0 1 3 4] TEST: [2]

TRAIN: [0 1 2 4] TEST: [3]

TRAIN: [0 1 2 3] TEST: [4]

Though k fold cross validation takes all the data points into account but there is problem associated with it. Consider a binary classification model. A problem may arise during the cross validation if the model gets trained on only one of the class during the Kth iteration. This mainly occurs when we have an imbalanced dataset. To counter this problem, we use another technique called Stratified K fold cross validation which considers nearly equal percentage of the target class in each iteration.

from sklearn.model_selection import StratifiedKFold

X = ny.array([[10,20,30], [40,50,60], [70,80,90], [100,110,120],

[5,15,25], [35,45,55], [65,75,85], [95,105,115]])

y = ny.array([0, 0, 1, 1, 0, 1, 0, 0])

skf = StratifiedKFold(n_splits=2)

for train_index, test_index in skf.split(X, y):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [3 5 6 7] TEST: [0 1 2 4]

TRAIN: [0 1 2 4] TEST: [3 5 6 7]

skf = StratifiedKFold(n_splits=3)

for train_index, test_index in skf.split(X, y):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [3 4 5 6 7] TEST: [0 1 2]

TRAIN: [0 1 2 5 7] TEST: [3 4 6]

TRAIN: [0 1 2 3 4 6] TEST: [5 7]

skf = StratifiedKFold(n_splits=4)

for train_index, test_index in skf.split(X, y):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

TRAIN: [2 3 4 5 6 7] TEST: [0 1]

TRAIN: [0 1 3 5 6 7] TEST: [2 4]

TRAIN: [0 1 2 4 5 7] TEST: [3 6]

TRAIN: [0 1 2 3 4 6] TEST: [5 7]

Figure : Cross Validation Illustration

Figure : Cross Validation Illustration

In this blog, we went through various cross validation techniques. These techniques play an important role in determining overall performance of the machine learning model. Mainly, Non-exhaustive cross validation techniques are used in machine learning. It helps in avoiding overfitting problem and selection of ML algorithm for a particular problem. If you want to refer to a implementation of cross validation techniques in a case study then I would recommend to read How to Use Hyperparameter Tuning in Python Machine Learning.

About the Author's:

Figure : Cross Validation Illustration

Figure : Cross Validation Illustration

Write A Public Review